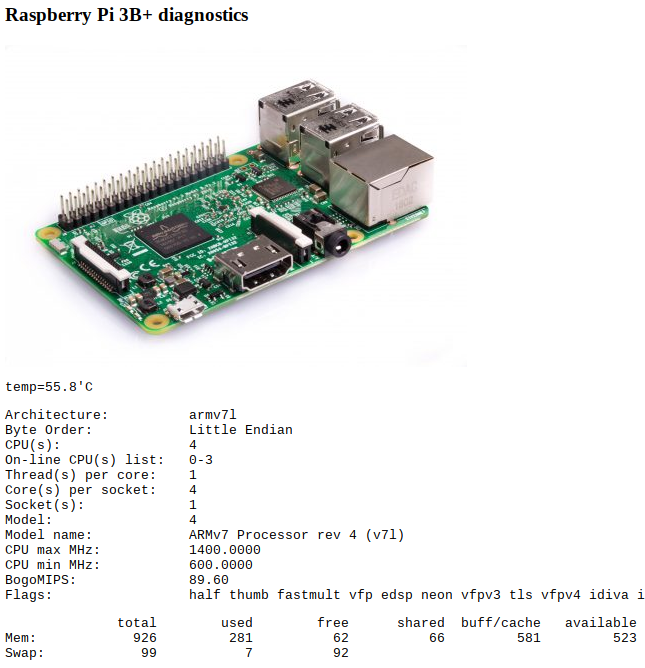

The Raspberry Pi 4B has recently been released, and it’s the first Pi that might actually be usable as a general-purpose desktop PC.

I don’t know its Geekbench score yet, but it has 4GB of RAM, can drive two 4K monitors, is more than twice as fast as the 3B+, and has gigabit Ethernet and USB3. That makes it an ideal cheap and secure device for running general-purpose office and school applications.

I ordered one and will report how it handles a Linux desktop and my typical workload. In theory, it’s the first Pi with enough power to rival a NUC for lightweight HTPC and desktop tasks.

Update after receiving and briefly testing the 4GB unit:

The Geekbench 2 (ARM build) score is 4830, versus 2266 for the 3B+, so it’s roughly 2.1 times as fast.

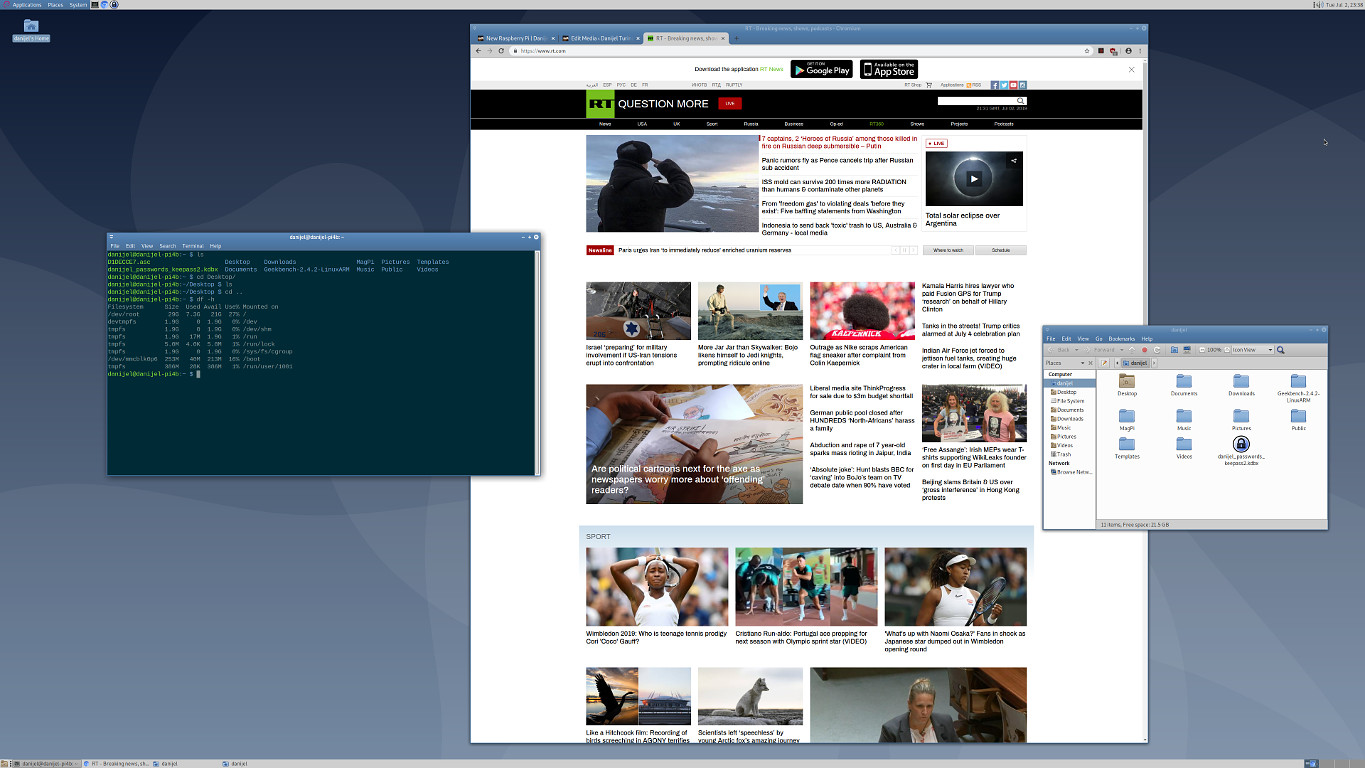

Subjectively, it’s about as fast as my media player, a Core2Duo E6500 @ 2900MHz. That means it’s quite usable, since that used to be my desktop machine: the speed isn’t up to today’s standards, but it’s not the stone age either. I’m writing this article on it and the speed is fine; it’s a normal desktop computer.

The kde-plasma-desktop package in Raspbian made a mess and is unusable, so I’m using the default Raspbian window manager. Raspbian is incredibly breakable: after I attempted to install multiple window managers, everything broke in many different ways. For instance, raspi-config fails to set a valid boot-to-GUI or boot-to-CLI configuration; it just does whatever it wants, and when I run startx, it completely bypasses lightdm/sddm and opens whatever it likes (at first the default Raspbian GUI, but later the Mate desktop, without the ability to switch between the two). It’s simply not ready for “normies”. Window manager switching should either not work at all or work well, without conflicting daemons/applets, and be reliably selectable through either the GUI or the CLI. I can’t believe I even have to say this.

Video works marginally OK when I select the legacy OpenGL driver in raspi-config. 720p video plays fine, with only 9 dropped frames out of 2800. Anything above 720p is not smooth. The mouse also moves better now.

The Mate desktop is much, much better than the default Raspbian GUI. Normal things such as the volume buttons actually work. This machine should have Ubuntu Mate as its desktop OS, and Raspbian should be left for hardware tinkering and emergency use only. Mate on Raspbian, however, is already good enough for normal desktop use. For instance, I couldn’t get the mouse to stop feeling sluggish in the Raspbian GUI; in mate-desktop-environment it just works. The same goes for the volume control buttons on both keyboards I tested. I could get used to this.

It’s prone to overheating. I repeatedly got the high-temperature icon while working in the Raspbian desktop during an apt-get install of a large dependency tree. Temperatures were above 80°C with an aluminium heatsink glued to the CPU but the plastic top of the case closed. I’ve now opened the case, and the temperature while just typing this is 66°C.
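If you’d rather watch the temperature than wait for the warning icon, here is a minimal Python sketch, not necessarily how the readings above were taken. It assumes the usual Raspbian setup, where the SoC sensor is exposed as `/sys/class/thermal/thermal_zone0/temp` in millidegrees Celsius; the function names are hypothetical, chosen for illustration.

```python
from pathlib import Path

# Assumption: on Raspbian the SoC temperature sensor is thermal_zone0,
# and the kernel reports it in millidegrees Celsius (e.g. "66218").
THERMAL_PATH = Path("/sys/class/thermal/thermal_zone0/temp")


def millidegrees_to_celsius(raw: str) -> float:
    """Convert a raw sysfs reading such as '66218' to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def read_cpu_temp(path: Path = THERMAL_PATH) -> float:
    """Read the current SoC temperature in degrees Celsius."""
    return millidegrees_to_celsius(path.read_text())


if __name__ == "__main__":
    if THERMAL_PATH.exists():
        print(f"CPU temperature: {read_cpu_temp():.1f} °C")
    else:
        print("No thermal_zone0 sensor found; probably not running on a Pi.")
```

Running it every couple of seconds (for example with `watch -n 2 python3 temp.py`, where `temp.py` is whatever you saved it as) is enough to compare a closed case with an open one.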

I plugged the powered USB3 hub from my desktop into the Pi and it just worked, plug and play, with all the devices.

There’s some super-weird shit going on with overheating. For instance, I’d left a Kingston USB drive plugged into the Pi, and when I went to remove it, it was hot, like, incredibly hot. I can’t remember whether that was also the case with the 3B+, but this isn’t normal, since the drive was idling, not copying the universe.

The CPU temperature is now 62-66°C, which is about ten degrees higher than the 3B+ under similar workloads. This CPU needs stronger cooling, which is to be expected: it has the power of an E6500, which uses a regular PC heatsink with a fan, while this has only a small passive heatsink.
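Besides the raw temperature, the Pi firmware keeps flags recording whether the SoC has hit its soft temperature limit, been throttled, or seen under-voltage (which also covers the weak power brick problem). The sketch below decodes the output of `vcgencmd get_throttled` (a line such as `throttled=0x50005`) using the bit layout from the Raspberry Pi documentation. It assumes `vcgencmd` is on the PATH, as it normally is on Raspbian; the helper names are hypothetical.

```python
import shutil
import subprocess
from typing import List

# Bit meanings of the `vcgencmd get_throttled` value, per the Raspberry Pi docs.
# Bits 0-3 describe the current state; bits 16-19 stay set once the event
# has happened at any point since boot.
THROTTLE_BITS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def decode_throttled(output: str) -> List[str]:
    """Turn a line like 'throttled=0x50005' into a list of active flags."""
    hex_value = output.strip().split("=", 1)[1]
    value = int(hex_value, 16)  # int() accepts the '0x' prefix with base 16
    return [desc for bit, desc in sorted(THROTTLE_BITS.items()) if value & (1 << bit)]


def read_throttled() -> List[str]:
    """Query the firmware via vcgencmd and decode the result."""
    result = subprocess.run(
        ["vcgencmd", "get_throttled"],
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return decode_throttled(result.stdout)


if __name__ == "__main__":
    if shutil.which("vcgencmd"):
        flags = read_throttled()
        print(", ".join(flags) if flags else "no throttling or under-voltage recorded")
    else:
        print("vcgencmd not found; probably not running on Raspbian.")
```

On a board with adequate power and cooling, the expected raw output is `throttled=0x0`, which decodes to an empty list.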

From what I can tell, the video drivers are the weakest spot of the OS so far. All kinds of artifacting go on while video is playing: the mouse pointer hiding and showing, the browser randomly redrawing, that kind of crap. It’s an alpha release. I don’t think hardware acceleration is turned on at all. There needs to be a Raspbian update made with the 4B in mind, because from what I recall, the 3B+ actually plays YouTube video better.

To repeat myself, there needs to be an OS fork for Pi devices: one for tinkering with hardware, for which Raspbian is great, and a polished one for desktop use in classrooms and similar settings. Ubuntu Mate seems like an awesome candidate, although I would also like to see kde-plasma-desktop working.

I’m testing it on a 43″ 4K monitor, with a mechanical keyboard and a Logitech G602 wireless mouse plugged into a powered USB3 hub, and it’s a very comfortable desktop experience until I get the idea of playing video. That part just doesn’t work well and needs to be fixed in a Raspbian update.

The hub also provides power for the Pi. I also tried a 45W USB-C Asus laptop brick and an Apple iPad brick. The iPad brick was the only one that didn’t provide enough power: I got constant undervoltage notifications, and at one point the device actually crashed during a power peak when starting Mate. Keep this in mind; the 4B requires a netbook-level power brick, not a phone- or tablet-level one. This is not your old Raspberry Pi that could run from a computer’s USB port and be fine. The power demands are still nowhere near those of any x86 desktop computer, but they match those of small, frugal laptops.

The overheating apparently went away once I removed the top cover of the case. The Pi would actually benefit from a slow case fan blowing on it, but a small high-RPM fan would be terribly counterproductive. The solution I would prefer would be this:

An aluminium case whose entire top part is a heatsink would be quite appropriate for a machine of this power, because if you close it inside an unventilated plastic enclosure it will melt itself to death, and if it’s left open it can be damaged in all sorts of ways in a classroom environment. Essentially, I’d install it in a VESA-mounted enclosure with a large heatsink and either extend the GPIO with a flat cable to some accessible spot on the monitor stand, or just forget about GPIO for desktop use: have a 4B for driving the desktop environment, for coding and web/office work, and one small, cheap A-type unit for driving sensors and robotics. You’ll do the development, deployment and testing over an ssh connection in any case; it’s just a matter of whether you develop on a “proper” desktop PC or a desktop-level Pi.

As far as I’m concerned, the 4B needs a software update that fixes its video problems and makes mate-desktop-environment a default option in Raspbian: well tested, polished, and not conflicting with the unnecessary LXDE and whatever other GUI used to make sense on the older generations. This one needs a choice between Mate, XFCE and KDE, not between SHIT and CRAP. Yes, this is high praise coming from me, and it means the device itself is quite excellent for its intended purpose. With proper cooling, properly implemented video codecs and some OS polishing, this could be the ideal classroom computer: cheap, small, integrated into the monitor for robustness, and fast enough to run everything kids need to learn. It’s also cheap enough to equip classrooms even in less well-off schools that can’t afford i3 or i5 desktops. So, thumbs up, but with a caveat regarding the OS, which is obviously alpha quality considering the needs of this device. I can hardly wait for Ubuntu Mate to be compiled and tweaked for the 4B.