I’ve been watching some YouTube videos about restoring old computers, because I’m trying to understand the motivation behind it.

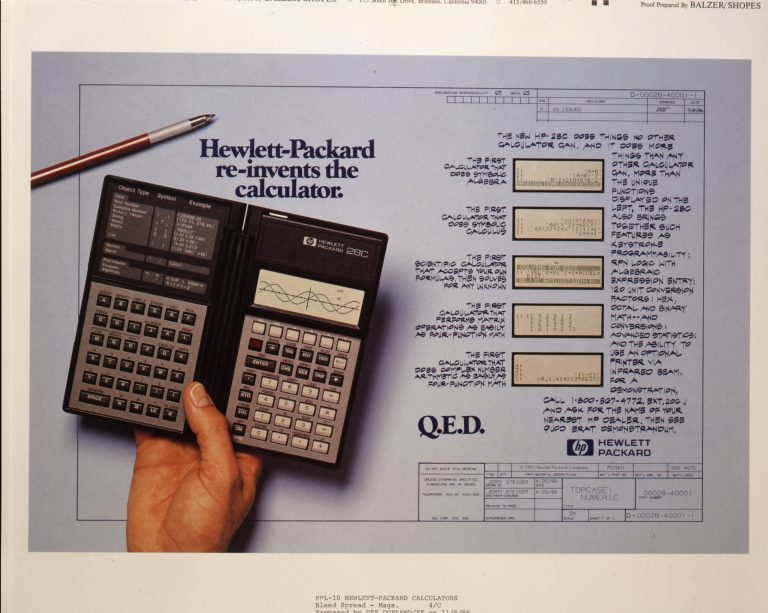

Sure, nostalgia: people who do it usually have childhood or youth memories associated with old computers, and restoring those machines and running ancient software probably brings the memories back. However, I’ve seen cases where an expert in restoring ancient hardware was asked to recover actual scientific data recorded on old IBM 8” floppy disks, stored in a data format readable only by an ancient computer that no longer exists. It was a real problem, and it was solved by connecting an old floppy drive to an old but still reasonably modern computer running a modern OS, communicating with the drive at a low enough level to access the files, and then copying them to modern storage. Also, they recovered ancient data from the Apollo era by using a restored Apollo Guidance Computer to read old core memories and copy them to modern storage for historical purposes. Essentially, they recovered data from various museum pieces and established what was used for what purpose. They also brought various old but historically interesting computers, such as the Xerox Alto, back to working condition, so that all their software could be demonstrated in a museum. So there’s the “computer archaeology” aspect of it, which I do understand, and that’s perfectly fine.

However, it’s obvious that most restored old computers end up being used once or twice and then moved to a shelf, because they are not really useful for anything today. The interesting part is that some very old machines are still in active use, and they do the job so well that there is no reason to replace them with new equipment: they do exactly what they were designed to do (for instance, supervising a power plant or running a missile silo), and since modern hardware doesn’t run the old software, you can’t just replace the computer with a new, faster model and plug it into the rest of the system.

No; the interfaces are different now, everything is different. You can’t just plug a modern workstation PC in place of a PDP-11. You’d first need to move all the data off the tape drives, 8” floppies and old hard drives. Then you’d have to replace the printers and somehow connect to the old peripherals, such as the sensors and solenoids. And then you’d have to rewrite all the old software and make it so reliable that it never breaks or crashes. The only benefit of all that would be more reliable hardware, because the stuff from the 1970s is 50 years old and breaks down. It’s no wonder that the industry solved the problem by building modern replacement computers with all the old interfaces: modern hardware running an emulation of the old computer, which runs all the ancient software perfectly, so that the system keeps doing what it was designed to do, but without old capacitors and diodes exploding. This approach has even made its way into consumer electronics: for instance, the modern HP 50g and HP 12C calculators have an ARM CPU emulating HP’s obsolete proprietary Saturn and Voyager-series processors, respectively, and running all the software written for the original platforms, because rewriting all the mathematical code in C and building it for a modern microcontroller would be prohibitively expensive, and there’s no money in it. Using modern hardware, writing an emulator for the old platform, and keeping all the legacy software, on the other hand, works perfectly fine, and nobody really cares whether it’s “optimal” or not. Now that I think about it, there must be tons of legacy hardware embedded in old airplanes and similar technological marvels of their time that is still in use today, and maintaining the aging electronics must be a nightmare that can’t be solved by merely replacing it with new stuff.

In all such cases, a quite reasonable solution is to emulate the old hardware on an ARM, or build a gate-accurate FPGA replica, and connect all the old stuff to it, buying time until the entire machine is retired. There must be a whole hidden industry that makes good money solving the problem of “just make a new and reliable computer for it and leave everything else as it is, because it works”.

So I can imagine perfectly well why one would keep a PDP-10, VAX-11 or IBM System/360 running today, if conversion to a modern platform is cost-prohibitive. That got me thinking, however: what’s the oldest computer I could actually use today, for any purpose?

The answer is quite interesting. For instance, if I had a spare serial terminal, a VT100 or something similar, and a workshop with a Raspberry Pi or some other Linux server, I could connect the ancient terminal to it to display logs and issue commands. It could sit there for that single purpose, and might be more convenient than connecting to the Linux server with my modern laptop in a very filthy environment. However, I don’t really know what I would do with a much more modern machine, such as an original IBM PC or the first Macintosh. They are merely museum pieces today, and I can’t find any practical use for them. So, what’s the next usable generation? It would need connectivity to modern hardware so that I could exchange data; for instance, I could use a very old laptop as a typewriter, as long as I could get the text out of it and use it on a modern machine later. Ideally, it would have network connectivity and be able to save data to a shared directory. Alternatively, it should have USB, so I can save things to a thumb drive. Worst case, I would use a floppy disk, and I say worst case because the 3.5” 1.44 MB ones were notoriously unreliable and I used to have all kinds of problems with them. It would have to be something really interesting to be worth the hassle, and I’d probably have to own it already to bother finding a use for it: for instance, an old Compaq, Toshiba or IBM laptop running DOS, where I would use character-graphics tools exclusively for writing text.

But what’s the oldest computer I could actually use today for literally everything I do, only slower? The answer is easy: the 15” mid-2015 MacBook Pro (i7-4770HQ CPU). It’s the oldest machine I still have in use, in the sense that it is retired but I maintain it as a “hot spare”, with an updated OS and everything, so I can take it out of a drawer and bring it to some secondary location where I want a fully functional computer already present, without having to assume I’ll have a laptop with me. By “fully functional”, I don’t mean just writing text, surfing the web or playing movies; I mean editing photos in Lightroom as well. The only drawback is that it doesn’t have USB-C, but my external SSDs with the photo archive can be plugged into USB-A with a simple cable swap, so that would all work, albeit more slowly than on my modern systems. So, basically, a 4th-generation Intel CPU, released in 2014, can still handle all my current workloads, but it’s significantly slower, already has port compatibility issues with modern hardware (Thunderbolt 2 with Mini DisplayPort connectors is a hassle to connect to anything today, as it needs special cables or adapters), and is retired, to be used only in emergencies or for specific use cases.

I must admit that I suffer from no nostalgia regarding old computers. Sure, I remember aspiring to get the stuff that was hot at the time, but it’s all useless junk now, and I have a very good memory and remember how limited it all was. What I use today was beyond everybody’s dreams back then: for instance, a display with resolution that rivals text printed on a high-res laser printer, the ability to display a photograph in quality that rivals or exceeds a photographic print, and the ability to play video in full quality, exceeding what a TV could do back then. I actually use my computer as a hi-fi component, playing music to the NAD in CD quality. Today, this stuff actually does everything I always wanted to do, whereas back then computers were vehicles for fantasy rather than tools to actually make it happen. I can take pictures with my 35mm camera in quality that exceeds everything I could get from 35mm film, and edit the raw photos on the computer with no loss of quality and no dependence on labs, chemicals or other people who would leave fingerprints on my film. So, when I think about old computers, I can understand the nostalgia, but the biggest part, for me, is remembering what I always wanted computers to do and feeling gratitude that it’s now a reality. The only thing that’s still a fantasy is strong AI, but I’m afraid that the kind of AI I would like to talk to would have very little use for humans.