Since Apple seems to be working very hard at alienating its Mac user base by introducing poorly designed “innovative” products with incredibly bad and unreliable keyboards, very breakable display cables, keyboards that rub against the display and abrade its coating, and ports that would be excellent if everybody already used them for everything (which is not the case), I think I’m not alone in trying to figure out a plan B in case they refuse to listen and keep releasing increasingly overpriced, unreliable garbage.

Linux would be great on the desktop if someone actually worked, for real money, on making it usable. So far, everybody just spawns new distros that don’t fix the real issues. I really tried making several of them work for me, but the list of issues is too long to even get into. It’s a steaming pile of garbage designed to look good in screenshots and presentations, but it falls apart when you try to actually use it. Linux has also alienated commercial software developers to the point where things don’t seem to have much hope of getting better.

Windows, on the other hand, has a different set of flaws: updates are intrusive and frequent, and they tend to break the system in a high percentage of cases. It also installs stupid games and other software without asking anyone, wasting space and bandwidth and annoying me in the process. Privacy concerns are significant. However, unlike Linux, it actually runs all the software I need, and the hardware runs much faster under Windows than under Linux, no matter what the penguin geeks tell you. Windows 10 actually has the quickest boot of all three desktop OSes and the greatest hardware compatibility, and the only thing it really lacks is the ability to run a Unix console and Unix software natively.

Or at least that used to be the case. Enter the Windows Subsystem for Linux (WSL). It’s basically something you turn on in Windows by running the following command in PowerShell (as admin):

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Windows-Subsystem-Linux

Then reboot when prompted, go to the Microsoft Store and install one of the WSL “distros”, such as Ubuntu, Debian, OpenSUSE, Kali, Arch, Fedora or whatever. I’m using Ubuntu because I’m familiar with where stuff is. Install this “package”, open it and follow the instructions. Once it’s done creating your user account, you can install packages for the services you need, for instance mysql-server, apache2, php, python and the like. Every shell application I tried works, except for nmap.

WSL, despite the name, doesn’t have much to do with Linux, since it doesn’t contain the Linux kernel; instead, it uses a translation layer that converts Linux system calls into something the Windows kernel can understand. It even reports the Windows build number (17763) as part of its kernel version, as this uname -a output shows:

Linux DANIJEL-KANTA 4.4.0-17763-Microsoft #379-Microsoft Wed Mar 06 19:16:00 PST 2019 x86_64 x86_64 x86_64 GNU/Linux
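If you want scripts that behave differently under WSL, that release string is enough to tell. The sketch below assumes the “version-build-Microsoft” shape shown above (other WSL versions may format it differently), and the function names are hypothetical, not part of WSL:

```bash
#!/bin/bash
# Sketch: recognise a WSL kernel release string and extract the Windows
# build number from it, e.g. "4.4.0-17763-Microsoft" -> 17763.
# Assumption: the "<version>-<build>-Microsoft" format shown by uname above.

# Succeeds if the release string (default: the running kernel's) looks like WSL.
is_wsl_release() {
    case "${1:-$(uname -r)}" in
        *Microsoft*|*microsoft*) return 0 ;;
        *) return 1 ;;
    esac
}

# Prints the Windows build number embedded in a WSL release string.
windows_build_from_release() {
    local rel="${1:-$(uname -r)}"
    is_wsl_release "$rel" || return 1
    printf '%s\n' "$rel" | cut -d- -f2
}

# Usage:
#   is_wsl_release && echo "WSL on Windows build $(windows_build_from_release)"
```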

Considering how it does its thing, it’s a minor miracle that it works as well as it does, and it really does work well.

All host drives are mounted under /mnt and named by their drive letters, in the usual DOS/Windows fashion:

danijel@DANIJEL-KANTA:~$ ls /mnt

c d e
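The mapping is mechanical: drive C: becomes /mnt/c, and backslashes become slashes. Here is a minimal sketch of that convention, assuming the default /mnt mount root; the function name is hypothetical, and WSL itself ships a wslpath tool that does this conversion properly, so treat this as an illustration rather than a replacement:

```bash
#!/bin/bash
# Sketch: convert a Windows path to its WSL equivalent, assuming drives are
# mounted under /mnt by lowercase drive letter (the default shown above).
win_to_wsl() {
    local win="$1" drive rest
    case "$win" in
        [A-Za-z]:*) ;;
        *) echo "win_to_wsl: not a drive-letter path: $win" >&2; return 1 ;;
    esac
    # "C" -> "c"
    drive=$(printf '%s' "${win%%:*}" | tr '[:upper:]' '[:lower:]')
    # "\Users\danij\Dropbox" -> "/Users/danij/Dropbox"
    rest=$(printf '%s' "${win#*:}" | tr '\\' '/')
    printf '/mnt/%s%s\n' "$drive" "$rest"
}

# Usage:
#   cd "$(win_to_wsl 'C:\Users\danij\Dropbox')"
```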

You can symlink host directories such as Documents, Pictures, Downloads, Dropbox etc. into your WSL home folder, and when you modify them from WSL, the changes are of course visible from Windows:

danijel@DANIJEL-KANTA:~$ ln -s /mnt/c/Users/danij/Dropbox/ .
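To link several folders at once, a small loop does it. A minimal sketch, assuming your Windows profile lives at /mnt/c/Users/danij as in the example above; link_windows_folders is a hypothetical helper that skips folders that don’t exist and never overwrites anything already in the destination:

```bash
#!/bin/bash
# Sketch: symlink selected Windows folders into a WSL directory.
#   $1   Windows profile directory as seen from WSL, e.g. /mnt/c/Users/danij
#   $2   destination directory, e.g. "$HOME"
#   $3.. folder names to link, e.g. Documents Pictures Downloads Dropbox
link_windows_folders() {
    local win_home="$1" dest="$2" name
    shift 2
    for name in "$@"; do
        # Only link folders that exist on the Windows side, and never clobber
        # a file, directory or (possibly dangling) link already there.
        if [ -d "$win_home/$name" ] && [ ! -e "$dest/$name" ] && [ ! -L "$dest/$name" ]; then
            ln -s "$win_home/$name" "$dest/$name" || return 1
        fi
    done
}

# Usage:
#   link_windows_folders /mnt/c/Users/danij "$HOME" Documents Pictures Downloads Dropbox
```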

Just don’t try to access the WSL directories from Windows, because that won’t end well. There are other issues: the terminal in which WSL runs doesn’t support tabs and has PowerShell clipboard behavior, which is “standard” only on Windows and incredibly confusing. Also, Linux GUI applications don’t run by default. Both issues can be resolved.

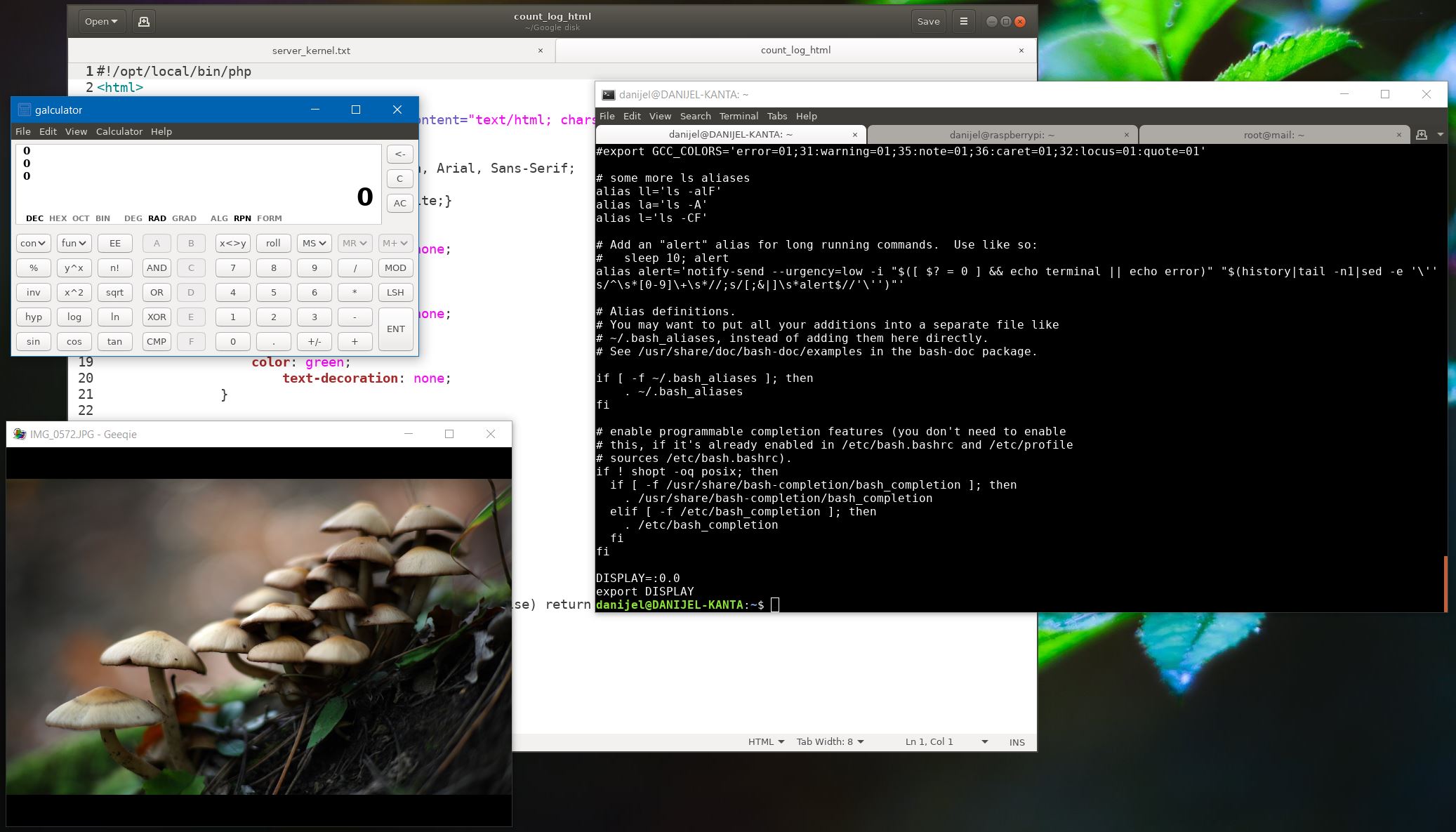

To run Linux GUI apps, you need an X11 server, installed on the Windows side. People usually recommend Xming, but I advise against it because of its unreliable clipboard behavior. There is a version of Xming compiled with Visual C++, called VcXsrv, which solves this problem; however, LibreOffice hangs when run in it, so I ended up purchasing the X410 app from the Microsoft Store. It’s commercial and seems to work the best (edit: I had stability issues with it; it just seems to hang for no reason). Also, once you are able to run Linux GUI apps, you can install and run your Linux terminal emulator of choice, such as gnome-terminal, mate-terminal or whatever. This solves the lack of a multi-tab terminal and gives you the expected Linux keyboard shortcuts.

sudo apt-get install gnome-terminal gedit galculator geeqie

Another problem is that the processes you start don’t detach from the terminal, which is what you’d normally want for GUI apps. This can be fixed with the following /usr/local/bin/run script:

#!/bin/bash

# Run the given command with all of its arguments, detached: no stdin, output discarded
"$@" </dev/null &>/dev/null &

Make it executable with

sudo chmod +x /usr/local/bin/run
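One catch with run is that, because it throws away all output, a mistyped program name fails silently. A slightly more defensive variant is sketched below as a shell function; it is a suggested alternative, not the script above, and to use it as /usr/local/bin/run you would save the function and call it on the last line:

```bash
#!/bin/bash
# Sketch of a more defensive "run": complain about a missing program before
# detaching, since all output is discarded afterwards.
run() {
    if [ "$#" -eq 0 ]; then
        echo "usage: run command [args...]" >&2
        return 2
    fi
    if ! command -v "$1" >/dev/null 2>&1; then
        echo "run: $1: command not found" >&2
        return 127
    fi
    # Detach: no stdin, stdout/stderr discarded, started in the background.
    "$@" </dev/null >/dev/null 2>&1 &
}

# When saved as /usr/local/bin/run, end the file with:
#   run "$@"
```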

and you’re set. Of course, for Linux to know where to send the GUI apps, you will need to append the following lines to your ~/.bashrc file:

DISPLAY=:0.0

export DISPLAY
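If you’d rather script that step, or re-run a setup script without piling up duplicate lines in ~/.bashrc, a small idempotent append helps. A minimal sketch; append_once is a hypothetical name, and the usage writes the export as a single line, which is equivalent to the two lines above:

```bash
#!/bin/bash
# Sketch: append a line to a file only if that exact line isn't there yet.
append_once() {
    local file="$1" line="$2"
    touch "$file" || return 1
    # If the file doesn't end with a newline, add one so lines don't get glued.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage:
#   append_once ~/.bashrc 'export DISPLAY=:0.0'
```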

You will also need to apply the dbus fix for things to work properly:

sudo apt-get install dbus-x11

You should also cat /etc/machine-id to verify that it contains a valid UUID with no dashes (32 lowercase hexadecimal characters). If it does, you can now run your Linux GUI apps.
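That check can also be scripted. The sketch below (valid_machine_id is a hypothetical name) only tests the shape described in the machine-id(5) man page, a single line of 32 lowercase hexadecimal characters; it doesn’t fix anything when the test fails:

```bash
#!/bin/bash
# Sketch: succeed if the file holds exactly 32 lowercase hex characters
# (no dashes), the format described in machine-id(5).
valid_machine_id() {
    local file="${1:-/etc/machine-id}" id
    [ -r "$file" ] || return 1
    id=$(cat "$file")    # command substitution drops the trailing newline
    case "$id" in
        *[!0123456789abcdef]*) return 1 ;;   # dashes, uppercase, extra lines...
    esac
    [ "${#id}" -eq 32 ]
}

# Usage:
#   valid_machine_id && echo "machine-id looks fine" || echo "machine-id needs fixing"
```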

You start them by invoking the “run” script we wrote before:

run gnome-terminal

This works great for almost everything, but I did write a few scripts that make things quicker, such as “edit”:

danijel@DANIJEL-KANTA:~$ cat /usr/local/bin/edit

#!/bin/bash

run gedit "$@"

Essentially, such scripts invoke the “run” command with predefined parameters: the GNOME text editor and a file name in this case. You can write similar scripts for the terminal or LibreOffice Writer:

danijel@DANIJEL-KANTA:~$ cat /usr/local/bin/writer

#!/bin/bash

run libreoffice --writer "$@"
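Since these wrappers all follow the same pattern, you could also generate them. A minimal sketch, assuming the run script from earlier is on your PATH and that the fixed arguments contain no single quotes; make_run_wrapper is a hypothetical helper. Writing into /usr/local/bin needs root, so it’s easier to point it at ~/bin, which Ubuntu’s default ~/.profile adds to PATH when that directory exists:

```bash
#!/bin/bash
# Sketch: write an executable wrapper script that calls "run" with a fixed
# command and fixed arguments, followed by whatever the user passes.
#   $1 directory to write into, $2 wrapper name, $3.. command and its fixed args
make_run_wrapper() {
    local dir="$1" name="$2" arg
    shift 2
    [ "$#" -gt 0 ] || { echo "usage: make_run_wrapper dir name cmd [args...]" >&2; return 2; }
    for arg in "$@"; do
        case "$arg" in
            *"'"*) echo "make_run_wrapper: single quotes not supported: $arg" >&2; return 1 ;;
        esac
    done
    {
        printf '#!/bin/bash\n'
        printf 'run'
        printf " '%s'" "$@"      # each fixed argument, single-quoted
        printf ' "$@"\n'         # then pass through the user's own arguments
    } > "$dir/$name" || return 1
    chmod +x "$dir/$name"
}

# Usage:
#   mkdir -p ~/bin
#   make_run_wrapper ~/bin writer libreoffice --writer
#   make_run_wrapper ~/bin edit gedit
```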

The standard way to open a document with its default application is xdg-open, but of course it doesn’t detach from the terminal either, so you would need to write a /usr/local/bin/open script that invokes run:

#!/bin/bash

run xdg-open "$1"

As an example, this will open a PDF:

open price_list.pdf

It’s actually awesome that the application you run from WSL doesn’t have to be a Linux app; it can also be a native Windows one, and you can design your run scripts accordingly. For instance, this version of /usr/local/bin/edit runs Notepad++, which is Windows-native:

#!/bin/bash

"/mnt/c/Program Files (x86)/Notepad++/notepad++.exe" "$@"
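One caveat: a Windows program can’t make sense of a Linux path like /mnt/c/Users/danij/notes.txt, so passing absolute paths straight through may not work. WSL ships a wslpath tool for this conversion (wslpath -w turns a WSL path into a Windows one); the self-contained sketch below shows the same idea for paths under /mnt/<letter> only, with a hypothetical function name:

```bash
#!/bin/bash
# Sketch: convert a WSL path under /mnt/<drive letter> to a Windows path,
# e.g. /mnt/c/Users/danij/notes.txt -> C:\Users\danij\notes.txt.
# Paths inside the Linux filesystem itself are rejected, not mapped.
wsl_to_win() {
    local p="$1" drive rest
    # Make relative paths absolute first.
    case "$p" in
        /*) ;;
        *) p="$PWD/$p" ;;
    esac
    case "$p" in
        /mnt/[a-z]|/mnt/[a-z]/*) ;;
        *) echo "wsl_to_win: not under /mnt/<letter>: $p" >&2; return 1 ;;
    esac
    drive=$(printf '%s' "${p#/mnt/}" | cut -c 1 | tr '[:lower:]' '[:upper:]')
    rest="${p#/mnt/?}"    # e.g. "/Users/danij/notes.txt"
    printf '%s:%s\n' "$drive" "$(printf '%s' "${rest:-/}" | tr '/' '\\')"
}

# Usage inside the Notepad++ "edit" script:
#   "/mnt/c/Program Files (x86)/Notepad++/notepad++.exe" "$(wsl_to_win "$1")"
```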

Unfortunately, you would actually need to write such run scripts unless you want to add every Windows application to PATH by hand, because typing this shit every time you want to edit something is not an option.

The good news, which might eliminate the need for most of these hacks, is that Microsoft seems to be working on a new and improved terminal for both WSL and PowerShell, and also on WSL 2, which will actually include a Linux kernel.

So, with all those hacks included, is Windows 10 a good replacement for Mac OS? I guess it depends. First of all, the Mac is not really a hack-free solution if you want a usable terminal environment. It’s missing almost all the useful GNU shell tools out of the box, and those need to be installed via Homebrew or MacPorts. Its terminal also needs a bit of tweaking to look good and work well. I still keep a Linux virtual machine on my MacBook Pro, just in case, and there’s the occasional odd piece of software that runs only on Windows. So whichever way you go, you are unlikely to avoid workarounds and tweaks.

Also, Time Machine on a Mac is a lifesaver: if your Mac dies without warning, you can buy a new one, restore it from backup, and in a few hours you’ll have a carbon copy of your old machine, fully working. With Windows, third-party solutions exist and work well, but the built-in backup system was trash the last time I was unfortunate enough to rely on it: it failed to do anything useful, forcing me to rebuild the system from the ground up, which took days to get right. This sounds like a little thing, but I assure you it isn’t, especially when you have work to do and your main machine is FUBARed. It’s such a big deal that I’d gladly pay a bit more for a Mac, but if a Mac is built like shit and also overpriced, I might just get annoyed enough to look for alternatives, even if they require third-party solutions and hacks.

I do use Windows on my desktop machine, and WSL with the aforementioned tweaks works really well, but the real question is what I would do if my MacBook Pro suddenly died. I guess I would still wait for Apple to fix their present SNAFU, but I’m preparing just in case they don’t.
